Artificial intelligence, for all its impressive advancements, often grapples with a hidden yet costly problem: redundant computation. Reasoning models frequently work through long chains of steps even when a question is simple, and those unnecessary calculations consume extra time and energy, making the models slower, more expensive to run, and more environmentally taxing. Enter OThink-R1, a dual-mode reasoning framework designed specifically to tackle this persistent issue head-on.

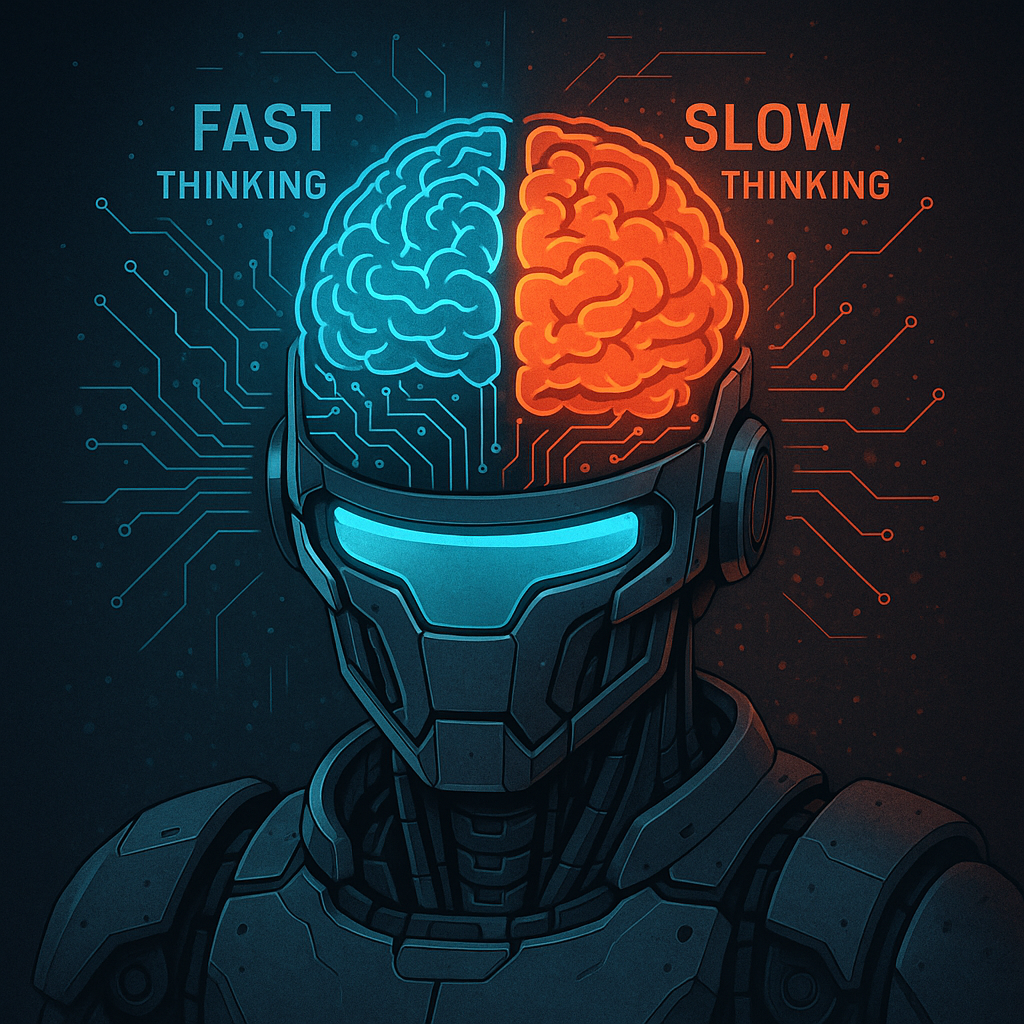

Developed to mimic the intricate workings of human cognition, the OThink-R1 framework leverages two complementary thinking modes: a fast, instinctual mode for routine and simple tasks, and a slower, more deliberate mode for complex and nuanced problems. This dual-mode approach effectively mirrors the human brain, which intuitively switches between quick judgments and deeper reflection as circumstances demand.

Why does this matter? In traditional AI systems, handling even simple tasks often involves unnecessary computational steps, resulting in wasted resources, slower performance, and higher operational costs. OThink-R1 addresses this by dynamically assessing the complexity of each task and selecting the most suitable processing mode accordingly. As a result, simpler tasks no longer carry the computational baggage typically associated with complex reasoning, and complicated tasks receive the detailed analysis they require without compromising efficiency.
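To make the idea of mode selection concrete, here is a minimal sketch of a router that scores a task and sends it to a fast or a slow solver. Everything in it is an illustrative assumption rather than OThink-R1's actual mechanism: the names `estimate_complexity` and `route`, the keyword heuristic, and the `threshold` value are all hypothetical stand-ins for whatever signal a trained model would use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RoutedAnswer:
    mode: str    # "fast" or "slow"
    answer: str


def estimate_complexity(task: str) -> float:
    """Toy complexity score in [0, 1]; a stand-in for a learned signal."""
    hard_markers = ("prove", "derive", "optimize", "step by step")
    # Longer prompts are treated as harder, capped at 50 words.
    length_score = min(len(task.split()) / 50.0, 1.0)
    # Certain keywords are treated as a sign that deliberate reasoning is needed.
    marker_score = 1.0 if any(m in task.lower() for m in hard_markers) else 0.0
    return 0.5 * length_score + 0.5 * marker_score


def route(
    task: str,
    fast_solver: Callable[[str], str],
    slow_solver: Callable[[str], str],
    threshold: float = 0.4,  # hypothetical cut-off, not taken from the framework
) -> RoutedAnswer:
    """Send simple tasks to the fast solver and complex ones to the slow solver."""
    if estimate_complexity(task) < threshold:
        return RoutedAnswer("fast", fast_solver(task))
    return RoutedAnswer("slow", slow_solver(task))


if __name__ == "__main__":
    fast = lambda t: "direct answer"
    slow = lambda t: "answer after step-by-step reasoning"
    print(route("What is 2 + 2?", fast, slow))
    print(route("Prove that the sum of two even integers is even.", fast, slow))
```

In a real system the two solvers could be the same model prompted or trained to answer with or without an extended reasoning trace; the sketch only shows the control flow.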

The secret behind OThink-R1’s impressive capability lies in its intelligent system for identifying and pruning redundant reasoning steps. By meticulously analyzing reasoning trajectories, OThink-R1 classifies computations into essential and nonessential categories. Nonessential computations, which previously consumed valuable time and resources, are efficiently eliminated. The result is a significant reduction in computational redundancies, faster processing speeds, and overall enhanced system performance.
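The split into essential and nonessential steps can be illustrated with a small sketch. It assumes a deliberately simple rule that is not taken from the framework: any step that comes after the trajectory has already reached the final answer is treated as nonessential, for example a repeated double-check. The `Step` type and the `split_trajectory` function are hypothetical names used only for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Step:
    text: str
    # Answer the step arrives at, if any (hypothetical annotation).
    candidate_answer: Optional[str] = None


def split_trajectory(
    steps: List[Step], final_answer: str
) -> Tuple[List[Step], List[Step]]:
    """Split steps into (essential, nonessential) using a toy rule.

    Assumption: once a step has produced the final answer, every later
    step only re-derives or re-checks it and can be pruned.
    """
    essential: List[Step] = []
    nonessential: List[Step] = []
    reached = False
    for step in steps:
        if reached:
            nonessential.append(step)
        else:
            essential.append(step)
            if step.candidate_answer == final_answer:
                reached = True
    return essential, nonessential


if __name__ == "__main__":
    trajectory = [
        Step("We need to add 17 and 25."),
        Step("17 + 25 = 42.", "42"),
        Step("Double-check: 25 + 17 = 42.", "42"),
        Step("Try another way: 20 + 22 = 42.", "42"),
    ]
    keep, prune = split_trajectory(trajectory, final_answer="42")
    print(f"kept {len(keep)} steps, pruned {len(prune)} steps")
```

A real classifier would need a richer notion of redundancy than position alone, since some verification steps do catch errors; the sketch only shows how a trajectory might be partitioned once such a judgment exists.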

But how exactly does OThink-R1 decide which mode to use? At its core, the framework relies on Large Reasoning Models (LRMs) trained to distinguish essential reasoning paths from redundant ones. This training is made possible by datasets constructed to capture what redundant reasoning looks like in practice. Once trained, the model switches between fast and slow thinking modes on its own, depending on task complexity.
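One way such a training set could be assembled is sketched below. It assumes each example has already been labeled as redundant or essential, and that fast mode is represented by an empty reasoning block. The `<think>` tag format, the field names, and the `build_training_example` helper are assumptions made for illustration, not details confirmed by the framework.

```python
from typing import Dict, List


def build_training_example(
    question: str, reasoning: str, answer: str, is_redundant: bool
) -> Dict[str, str]:
    """Build one prompt/completion pair for supervised fine-tuning.

    Assumption: when the reasoning was judged redundant, the target keeps
    an empty thinking block (fast mode); otherwise the full reasoning is
    kept (slow mode).
    """
    thinking = "" if is_redundant else reasoning
    return {
        "prompt": question,
        "completion": f"<think>{thinking}</think>{answer}",
        "mode": "fast" if is_redundant else "slow",
    }


def build_dataset(records: List[Dict]) -> List[Dict[str, str]]:
    """Convert labeled records into training examples."""
    return [
        build_training_example(
            r["question"], r["reasoning"], r["answer"], r["is_redundant"]
        )
        for r in records
    ]


if __name__ == "__main__":
    records = [
        {
            "question": "What is 2 + 2?",
            "reasoning": "2 + 2 = 4. Check again: 4.",
            "answer": "4",
            "is_redundant": True,
        },
        {
            "question": "Is 91 prime?",
            "reasoning": "91 = 7 * 13, so it has a nontrivial factor.",
            "answer": "No",
            "is_redundant": False,
        },
    ]
    for example in build_dataset(records):
        print(example["mode"], "->", example["completion"])
```

A model fine-tuned on pairs like these would see short targets for easy questions and full reasoning for hard ones, which is the behavior the dual-mode idea aims for.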

This approach to computational efficiency has potential applications across many sectors. In finance, an industry where milliseconds and accuracy can mean the difference between profit and loss, deploying OThink-R1 could speed up decision-making without giving up the deeper analysis that hard cases need. Similarly, healthcare providers could use the framework to reach answers on routine questions more quickly while reserving careful reasoning for complex cases, with the aim of improving patient care and outcomes.

Beyond industry-specific benefits, OThink-R1 also benefits the broader AI ecosystem by reducing energy usage. With less redundant computation, AI systems can operate more sustainably, an increasingly important factor as environmental concerns mount. Lower energy consumption also means lower operational costs, which makes powerful AI systems more accessible to smaller organizations and research institutions.

OThink-R1's innovative approach positions it at the forefront of the AI efficiency revolution. Its potential impact extends beyond just speed and cost savings; it actively contributes to sustainability, accessibility, and practical AI deployment across diverse domains. Developers and researchers interested in exploring or integrating this groundbreaking framework can find it openly available on GitHub.

As we stand on the cusp of a new era in artificial intelligence, breakthroughs like OThink-R1 illuminate pathways to smarter, leaner, and more versatile AI systems. The future of AI now appears not just intelligent, but thoughtfully efficient.